Soumitra Dutta’s Insights on Building a Future-Ready AI Strategy

Artificial intelligence is transforming the way organizations operate and make decisions. However, realizing its full potential requires more than adopting algorithms—it demands a strategic approach that aligns with broader business goals.

From laying a future-proof foundation to selecting practical use cases, companies must consider readiness, talent, and ethical implications. As Soumitra Dutta points out, the most successful AI strategies are those that evolve continuously, remain adaptable to change, and are built on a solid understanding of current capabilities.

Defining a Future-Ready AI Approach

A future-ready AI strategy is built to adapt and grow alongside emerging technologies and shifting market demands. It’s not just about deploying the latest tools, but ensuring the foundation can scale and flex as needs change.

Companies investing in AI today must think beyond short-term performance gains. A forward-looking approach prioritizes long-term usefulness, which means designing systems that can integrate with new data sources, support evolving workflows, and remain effective as regulations change.

In healthcare, for example, AI tools that support diagnostics need to accommodate ongoing updates to clinical guidelines, patient data models, and even regional policy. Being future-ready also means preparing for uncertainty. Technology cycles are accelerating, and businesses that build flexibility into their AI strategies are more likely to lead than to follow.

Connecting AI and Business Goals

AI becomes meaningful when it directly contributes to business success. Aligning AI efforts with strategic objectives ensures resources are channeled toward solving real organizational challenges, whether that’s enhancing customer experiences, reducing operational inefficiencies, or anticipating market trends. Without this alignment, even the most advanced models risk becoming disconnected experiments with little impact.

Leadership support plays a pivotal role. When executives champion the integration of AI into company strategy, it signals commitment and encourages collaboration across departments. In retail, aligning AI with supply chain forecasting has helped companies reduce overstock, respond faster to demand shifts, and optimize inventory cycles—showing how strategic alignment drives measurable results and positive ROI.

Evaluating Current Readiness and Infrastructure

Before launching any AI efforts, organizations should know what they already have. This means taking stock of data quality, technical architecture, and internal expertise. Many teams discover that while they have data, it’s siloed, inconsistent, or not easily accessible. These barriers can stall even the most promising AI initiatives and delay deployment.

It’s also important to assess whether the current infrastructure can support iterative model development and deployment. Some companies realize too late that their legacy systems can’t integrate with modern AI tools, which slows progress. A clear-eyed audit of systems and workflows helps avoid these pitfalls and sets a realistic baseline for growth.

Selecting High-Impact, Practical Use Cases

Choosing the right starting points is essential for building traction. Projects that deliver value quickly while laying the groundwork for broader changes often gain more support and momentum. In financial services, fraud detection models that reduce losses while improving customer trust have proven to be both impactful and approachable. These early wins build credibility and pave the way for more ambitious AI efforts across the enterprise.

Not every use case is worth pursuing. Some ideas may sound innovative but lack a clear business outcome or require data that’s incomplete or unreliable. Focusing on initiatives with strategic relevance and operational feasibility helps teams avoid wasted effort and accelerates adoption. Organizations that take the time to validate use case assumptions up front are more likely to produce outcomes that scale effectively.

Developing Talent and Promoting Responsible AI

The success of any AI initiative depends on the people behind it. A mix of domain experts, data scientists, engineers, and product leaders ensures that solutions are not only technically sound but also grounded in real-world needs. Organizations that invest in upskilling existing staff often see faster adoption, stronger internal alignment, and greater innovation from cross-functional collaboration.

Responsible AI is not an afterthought. It must be embedded from the start. Whether it’s reducing model bias in hiring platforms or ensuring transparency in loan approval systems, ethical considerations shape trust and long-term viability. Teams that prioritize fairness and compliance early on are better positioned to navigate public scrutiny and regulatory shifts.

Deployment is not the end of the work. As models encounter new data and shifting user behavior, they need to be retrained and refined. Monitoring performance over time reveals patterns that aren’t always obvious in early testing. This feedback loop is what separates short-lived pilots from sustainable AI products.

Disclaimer: This is a sponsored piece of content. Time Bulletin journalists or editorial staff were not involved in the production or writing of this content.

-

Education4 weeks ago

Education4 weeks agoBelfast AI Training Provider Future Business Academy Reaches Milestone of 1,000 Businesses Trained

-

Sports3 weeks ago

Sports3 weeks agoUnited Cup 2026: Full Schedule, Fixtures, Format, Key Players, Groups, Teams, Where and How to Watch Live

-

Tech6 days ago

Tech6 days agoAdobe Releases New AI-powered Video Editing Tools for Premiere and After Effects with Significant Motion Design Updates

-

Book3 weeks ago

Book3 weeks agoAuthor, Fighter, Builder: How Alan Santana Uses His Life Story to Empower the Next Generation Through UNPROTECTED

-

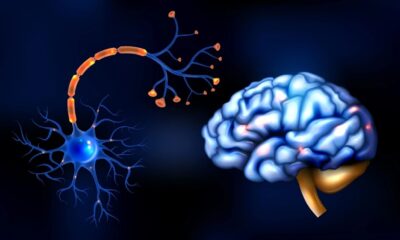

Health4 weeks ago

Health4 weeks agoNew Research and Treatments in Motor Neurone Disease

-

Science4 weeks ago

Science4 weeks agoJanuary Full Moon 2026: Everything You Should Need to Know, When and Where to See Wolf Supermoon

-

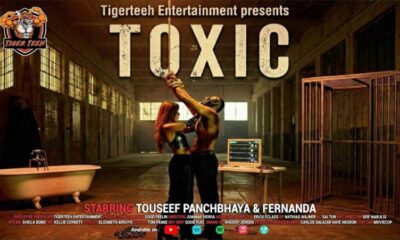

Entertainment4 weeks ago

Entertainment4 weeks agoTigerteeh aka Touseef Panchbhaya Drops His Latest Hindi Track “Toxic”

-

Business2 weeks ago

Business2 weeks agoSpartan Capital Publishes 2026 Economic Outlook, Highlighting Volatility, Resilience, and Emerging Opportunities